How does Google get information about your website?

We can break it down into three core tasks: crawling, indexing and ranking.

How does Google crawl your website?

Crawling is Google’s discovery process and the aim is to find every single web page, document, image and video on the entire internet. It’s a really tough job because some of these webpages only have one link pointing at them, or sometimes no links at all. Yet Google still needs to find these pages, because they could have some really useful information on them.

When Google first started in the dorm rooms of Stanford University, they couldn’t begin with a single website such as stanford.edu, because webpages are like clusters or neighbourhoods, and tend to link to websites which are similar to themselves. So if you only crawled the Stanford website as a gateway to finding links across the entire internet, you would get a very small and very biased search engine. It would be a very academically focused, potentially politically biased search engine. So they most likely used a human-edited web directory, such as DMOZ, to get a broad array of vetted websites for every topic, country and political spectrum. For many years, Google even hosted a copy of the DMOZ web directory and linked to it from their homepage – as some people at that time still preferred directories over search engines.

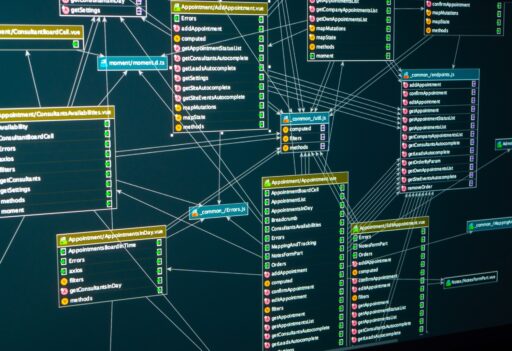

Google has a “robot” called Googlebot. It visits every single website in its “seed list” and scrapes the web pages, then looks for links or URLs in the content at those URLs. New URLs get added to a “crawl queue” and Googlebot will visit those URLs later on. It’s a never-ending process of visiting a page, extracting URLs from it, putting them in a queue and then collecting the next URL from the queue. So the entire crawl process is centred around downloading these webpages and then, once they’ve been downloaded, looking for links or URLs within the content.
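To make that loop concrete, here’s a minimal sketch in Python. It is not how Googlebot is actually built. The `FAKE_WEB` dictionary, the `LinkExtractor` class and the `crawl` function are all made up for illustration, and the “web” is just an in-memory dictionary rather than real HTTP requests.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "web" (URL -> HTML). A real crawler would fetch over HTTP.
FAKE_WEB = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post</a> <a href="/about">About</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_list):
    """Visit a page, extract its URLs, queue the new ones, take the next URL."""
    queue = deque(seed_list)   # the "crawl queue"
    seen = set(seed_list)      # URLs that have already been queued
    visited = []
    while queue:
        url = queue.popleft()
        html = FAKE_WEB.get(url)
        if html is None:       # nothing to download at this URL
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


print(crawl(["https://example.com/"]))
```

Starting from a one-URL seed list, the sketch ends up visiting all four pages, because each page it downloads adds its unseen links to the queue.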

These days search engines also use XML Sitemaps, which are files that the webmaster can put on their website and submit to Google Search Console, or simply link to from their robots.txt file. A sitemap basically gives search engines a list of pages to crawl on your website, so that they don’t have to try and find them themselves. This is useful if your website is particularly hard to crawl, maybe because you’re using a JavaScript framework. It just makes sure that the search engines know about every single page on your website.
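As a rough illustration, a very basic XML Sitemap could be generated like this. The `build_sitemap` function and the example.com URLs are made up for this sketch, and it only writes the `<loc>` element for each page, leaving out the optional fields in the sitemap format.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML Sitemap string listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


print(build_sitemap([
    "https://example.com/",
    "https://example.com/mothers-day-flowers",
]))
```

You would save the output as something like sitemap.xml on your website, then submit that URL in Google Search Console or reference it from robots.txt.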

What does Google do with all of the web page content that it downloads?

This is where the indexing process comes in. These days, Google may try to load the downloaded HTML content in a Google Chrome web browser, to see if there’s any dynamic JavaScript content that they’ve missed. Google actually downloads the HTML content first though, without using Chrome. It’s during the indexing stage that they then use Chrome if they think it’s needed. If the content is different after using Google Chrome, they’ll assume it’s a JavaScript-driven website that needs to be rendered. But, as we’ve said in a previous episode, there’s no guarantee that Google will use Chrome to render your HTML and see the extra content.

They save the rendered HTML or “DOM” (Document Object Model) and use it for the indexing process. They then extract all of the important metadata. So that’s your page titles (<title> tags), your meta description and your canonical tag, which is all of the information that’s important to them. This includes Structured Data (Schema.org) for product reviews etc, and content from the page body itself. They identify important keywords within that content, which is actually a very difficult process. Google also checks to see if that content has changed much since the last visit. If it hasn’t really changed much or hasn’t changed at all, they know that they don’t have to visit that webpage very often. Whereas if it has changed a lot since the last time they visited that page, they’ll make sure that they visit it more often, just to make sure that they always have the freshest content.
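Here’s a small sketch of what pulling that metadata out of a page could look like, using Python’s built-in HTML parser. The `MetadataExtractor` class, the `extract_metadata` function and the sample page are invented for illustration. Google’s real indexing pipeline is not public and is certainly far more involved.

```python
from html.parser import HTMLParser

# Hypothetical page used only for this example.
SAMPLE_HTML = """
<html><head>
<title>Mother's Day Flowers</title>
<meta name="description" content="Order flowers for Mother's Day.">
<link rel="canonical" href="https://example.com/mothers-day-flowers">
</head><body><h1>Flowers</h1></body></html>
"""


class MetadataExtractor(HTMLParser):
    """Collects the page title, meta description and canonical URL."""

    def __init__(self):
        super().__init__()
        self.metadata = {"title": None, "description": None, "canonical": None}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.metadata["description"] = attrs.get("content")
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.metadata["canonical"] = attrs.get("href")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Title text can arrive in several chunks, so append rather than overwrite.
        if self._in_title:
            self.metadata["title"] = (self.metadata["title"] or "") + data


def extract_metadata(html):
    parser = MetadataExtractor()
    parser.feed(html)
    return parser.metadata


print(extract_metadata(SAMPLE_HTML))
```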

Google’s index is now over 100 petabytes in size. Assuming an average computer hard drive holds around 500 GB, that’s around 200,000 hard drives’ worth of data in Google’s index. So it’s a huge amount of information.

How does all that data transform into search results?

This is where the ranking process comes in. So when someone searches for a keyword, let’s say [mothers day flowers], Google uses their index of keywords to find every single webpage on the internet that mentions Mother’s Day Flowers. It then ranks those pages by relevance, based on how good the content quality is and how important the page is.

Content quality and relevance focus on the keyword appearing in the title, description, headings and the content. The content itself must be really relevant and helpful. Their secret algorithm is responsible for determining what is good and bad content. The importance of a web page is governed by the number and the quality of links pointing at that web page.
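To give a feel for the idea of “find the pages that mention the keyword, then rank them”, here’s a toy sketch. It is emphatically not Google’s algorithm, which is secret. The `PAGES` data, the `build_index` and `search` functions, and the scoring weights (2 points per query word in the title, 1 point per inbound link) are all arbitrary assumptions made up for this example.

```python
# Hypothetical mini "index" of three pages with made-up link counts.
PAGES = {
    "https://a.example/flowers": {
        "title": "mothers day flowers",
        "body": "order mothers day flowers online",
        "inbound_links": 5,
    },
    "https://b.example/blog": {
        "title": "gardening blog",
        "body": "ideas for mothers day flowers and gifts",
        "inbound_links": 8,
    },
    "https://c.example/cars": {
        "title": "used cars",
        "body": "cars for sale",
        "inbound_links": 20,
    },
}


def build_index(pages):
    """Map each word to the set of URLs whose title or body mentions it."""
    index = {}
    for url, page in pages.items():
        words = (page["title"] + " " + page["body"]).lower().split()
        for word in set(words):
            index.setdefault(word, set()).add(url)
    return index


def search(query, pages, index):
    """Return URLs containing every query word, best score first."""
    terms = query.lower().split()
    if not terms:
        return []
    candidates = set.intersection(*(index.get(t, set()) for t in terms))

    def score(url):
        page = pages[url]
        title_words = page["title"].lower().split()
        title_hits = sum(t in title_words for t in terms)
        # Arbitrary weights, for illustration only.
        return 2 * title_hits + page["inbound_links"]

    return sorted(candidates, key=lambda u: (-score(u), u))


index = build_index(PAGES)
print(search("mothers day flowers", PAGES, index))
```

In this toy data the cars page has the most links but never appears for the flowers query, because it doesn’t mention the keywords at all. The flowers page outranks the gardening blog because the query words appear in its title.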

Nobody in Google knows the entire algorithm, not even its founders. This is partly to protect the company’s biggest USP, but mostly because it is so complex and multifaceted that it’s impractical to know every variable. So if someone selling SEO services says that they used to work at Google, that doesn’t mean that they know anything more about the search engine’s algorithm than any other SEO.

It’s a very complicated process, but the important factors are really good quality content (very relevant to the keyword the person is searching for) and lots of third party links from relevant websites, saying “this is the webpage that knows the most about Mother’s Day Flowers”.

This all happens in real-time, which is quite surprising for most people, unless the same query has been made recently by the exact same person or someone with a similar search profile. In 2017, Google was quoted as saying that 15% of all searches on their website have never been searched for before. So they do a lot of data processing in advance and keep it stored away in a database, but the most important data crunching and ranking happens as soon as someone clicks “Search” on the Google homepage.

What if we don’t want Google indexing our content?

Search engines have all agreed on a three-tier process for blocking content from them.

The first is a robots.txt file that is uploaded to the root (top folder) of your website. It tells search engines what they can and can’t look at. So you can block your entire website from all search engines or just from specific ones. You can say that certain folders, such as your admin or “My Account” folder, can’t be crawled, or you can give it specific URLs that you don’t want search engines to crawl. You can also tell search engines where your XML Sitemap is located in your robots.txt, which is something we talked about just before.
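Here’s a short sketch of what such a file might contain, checked with Python’s built-in `urllib.robotparser` module. The folder names and the example.com URLs are assumptions made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block two folders for every crawler, list the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /my-account/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Blocked folder
print(parser.can_fetch("Googlebot", "https://example.com/admin/settings"))   # False
# Normal page
print(parser.can_fetch("Googlebot", "https://example.com/products/roses"))   # True
# Sitemap location declared in the file
print(parser.site_maps())  # ['https://example.com/sitemap.xml']
```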

The second tier is called an X-Robots-Tag HTTP header. HTTP headers are basically metadata that gets sent from your web server to a person’s web browser, before a webpage is even loaded. So this basically tells the web browser if the page exists (which is where you get your status codes, such as 200 OK and 404 Page Not Found). It can also tell the web browser when the page was last modified, which potentially means that the browser could actually use its own saved copy of a page, rather than load it from scratch again. It also tells the web browser what kind of content is on a page. But you can configure your web server to serve an X-Robots-Tag HTTP header and this will tell search engines not to index the page or not to follow the links on that page, before Google even downloads the web page. This is where it actually becomes quite handy, because if you can’t put this information in the robots.txt file, you can save your servers a lot of resource and bandwidth, as the search engines won’t bother to download your HTML.
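How you set the header depends on your web server, so treat the following as a sketch only. It’s a tiny Python WSGI application that adds an X-Robots-Tag header to any URL under a made-up `/internal-reports/` folder. The `app` function and the `NOINDEX_PREFIXES` tuple are hypothetical names for this example.

```python
# Hypothetical list of URL prefixes that should stay out of search results.
NOINDEX_PREFIXES = ("/internal-reports/",)


def app(environ, start_response):
    """Minimal WSGI app that sends X-Robots-Tag for selected paths."""
    path = environ.get("PATH_INFO", "/")
    headers = [("Content-Type", "text/html; charset=utf-8")]
    if path.startswith(NOINDEX_PREFIXES):
        # Ask search engines not to index this page or follow its links.
        headers.append(("X-Robots-Tag", "noindex, nofollow"))
    start_response("200 OK", headers)
    return [b"<html><body>Hello</body></html>"]
```

To try it locally you could serve it with `wsgiref.simple_server.make_server("", 8000, app)` and inspect the response headers in your browser’s developer tools.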

The third tier is a “robots” meta tag. It’s mostly used for individual pages that you don’t want to appear in search results. The problem here is that search engines have to visit every single URL that they know about on your website and download those pages, before they can see if you have this robots meta tag in place. So you actually create a lot of unnecessary pressure on your website for no reason at all. So only use a “robots” meta tag for blocking pages if it’s not possible to use a robots.txt file or X-Robots-Tag HTTP header.
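For reference, the tag itself sits in the page’s `<head>`. The sketch below shows an example tag and how a crawler might read it once the page has been downloaded. The `RobotsMetaParser` class, the `robots_directives` function and the sample page are invented for illustration.

```python
from html.parser import HTMLParser

# Hypothetical page that asks not to be indexed.
PAGE_WITH_NOINDEX = """
<html><head>
<meta name="robots" content="noindex, nofollow">
</head><body>Private page</body></html>
"""


class RobotsMetaParser(HTMLParser):
    """Collects the directives from any <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


print("noindex" in robots_directives(PAGE_WITH_NOINDEX))  # True
```

Notice that the function needs the full HTML before it can answer, which is exactly why this tier costs more than the other two.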

What happens when you move or delete a web page?

If there’s no longer a page live at a URL that Google visits, the server will display a 404 “Page Not Found” error, which we see quite a lot when surfing the web. Sometimes websites move or delete a page by mistake and because of this, Google will continue to revisit that URL. Even if the page serves a 404, Google will continue to visit that URL for months and months, even years, just in case the page comes back to life.

What if you urgently need to remove a page from Google’s index?

If you’ve posted something controversial on your website or you just need to get it out of the search results ASAP, you can tell your server to serve a 410 HTTP Status code instead of a 404. It tells Google that the URL will never have a content page on it ever again. So Google can just remove it from its index straight away. From my experience, you can remove a URL from Google’s index with a 410 status code within a matter of hours. It’s a very effective way of taking down content.

But it’s a very dangerous thing to use as a blanket rule. You don’t want to accidentally move or delete a page, then have your server display a 410 error. If that happens, Google may never revisit that page again, and you’ll have to change the URL and rebuild the link authority.
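One way to avoid the blanket-rule problem is to keep an explicit list of URLs that are gone for good, and let everything else that’s missing fall back to a 404. Here’s a minimal sketch of that idea. The `LIVE_PAGES` and `PERMANENTLY_REMOVED` sets and the `status_for` function are made-up names, and a real website would look these up in its own database or server config.

```python
from http import HTTPStatus

# Hypothetical data for illustration.
LIVE_PAGES = {"/", "/products/roses"}
PERMANENTLY_REMOVED = {"/press/retracted-statement"}  # deliberately taken down


def status_for(path):
    """Pick the HTTP status code to serve for a requested path."""
    if path in LIVE_PAGES:
        return HTTPStatus.OK          # 200
    if path in PERMANENTLY_REMOVED:
        return HTTPStatus.GONE        # 410: only for URLs removed on purpose
    return HTTPStatus.NOT_FOUND       # 404: the safe default for anything else


print(int(status_for("/press/retracted-statement")))  # 410
print(int(status_for("/typo-in-url")))                # 404
```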

What’s the correct way to move a web page or change URLs?

You should serve a 301 “Permanent Redirect” from the old URL location to the new one. This can be done using your web server or sometimes in your ecommerce platform as well, especially if you’ve got an SEO plugin installed, as these will usually have a Redirects option built-in. Those 301 redirects should exist forever. You shouldn’t just put one up temporarily, whilst Google realizes that your page has relocated, then remove it afterwards. You never know when the search engine is going to revisit that old URL. Also, if there are any links pointing at that old URL, you want its authority to flow into the new location. That’s what a 301 Redirect can do for you.

You shouldn’t use a 302 Redirect though, which is a temporary redirect. It’s used a lot in applications by web developers, especially where they don’t quite understand the implications for SEO. The temporary redirect basically tells Google that the page has moved, but that it’ll probably go back to its original location at some point, so it shouldn’t trust the redirect. Because of that, Google has struggled to handle 302 Redirects for decades. All of the authority from those links that are pointing at the old URL can’t flow to the new location because, at any moment, that old URL could come back to life again. So quite often these 302 redirect pages will struggle to rank well. Sometimes the old URL will actually appear in the search results, rather than the new URL. So it can cause some real issues and some very quirky search results. Only use 302 Redirects behind a login screen or when posting forms, where search engines aren’t likely to find them.
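In practice, permanent redirects usually boil down to a lookup table of old URLs and their new homes. Here’s a minimal sketch of that, assuming a made-up `PERMANENT_MOVES` table and `redirect_for` function. Your web server or SEO plugin will have its own way of storing these rules.

```python
from http import HTTPStatus

# Hypothetical table of old path -> new path. Entries should stay here forever.
PERMANENT_MOVES = {
    "/old-roses": "/products/roses",
    "/mothers-day-2019": "/mothers-day-flowers",
}


def redirect_for(path):
    """Return (status, headers) for a moved URL, or None if it hasn't moved."""
    target = PERMANENT_MOVES.get(path)
    if target is None:
        return None
    # 301 tells search engines the move is permanent, unlike a 302.
    return HTTPStatus.MOVED_PERMANENTLY, [("Location", target)]


print(redirect_for("/old-roses"))
```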

Please Note: The content above is a semi-automated transcription of the podcast episode. We recommend listening (and subscribing) to the podcast, in case any of the content above is unclear.